Google has released Gemini 3, its most intelligent AI model to date, with advanced reasoning and multimodal capabilities. As of today, Gemini 3 is accessible via the Gemini app, AI Studio, and Vertex AI. Launch details were shared by Google and Alphabet CEO Sundar Pichai, along with Google DeepMind executives Demis Hassabis and Koray Kavukcuoglu. This is the first time a Gemini version has been integrated into Search on its launch day. The Gemini 3 Deep Think mode, designed for deeper reasoning, is undergoing final safety testing before its release to Ultra subscribers.

Technical specs, performance results, and new developer tools

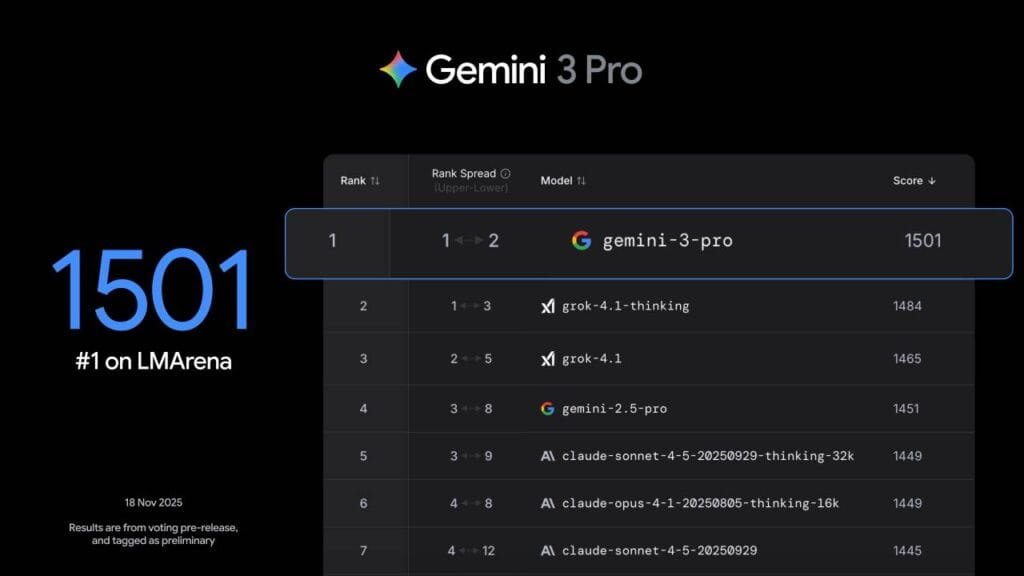

Since the inception of the Gemini era approximately two years ago, Google has expanded its AI product portfolio significantly. According to company data, AI Overviews in Search has reached 2 billion monthly users, while the Gemini app serves 650 million monthly users. More than 70% of Cloud customers utilize Google’s AI solutions, and 13 million developers have built applications using these generative models. Following Gemini 1’s introduction of native multimodality and long context windows, and Gemini 2’s foundation for agentic capabilities, Gemini 3 builds upon previous generations. It succeeds Gemini 2.5 Pro, which held the top position on LMArena for over six months.

Gemini 3 is better at inferring context and user intent, so it produces useful results with fewer prompts. The model moves beyond simple text and image processing to grasp situational nuances. Google is deploying this model at scale: AI Mode in Search has been updated with Gemini 3 to support more complex reasoning and new dynamic experiences. The model is also available to developers through Google Antigravity, a new agent-based development platform, as well as through AI Studio and Vertex AI.

In terms of technical performance, Gemini 3 Pro outperforms Gemini 2.5 Pro across all major AI benchmarks. The model topped the LMArena Leaderboard with a score of 1501 Elo. It demonstrated PhD-level reasoning capabilities by scoring 37.5% on Humanity’s Last Exam (without tool use) and 91.9% on GPQA Diamond. Setting a new standard in mathematics, it achieved 23.4% on MathArena Apex. In multimodal reasoning, it reached scores of 81% on MMMU-Pro and 87.6% on Video-MMMU. On SimpleQA Verified, which measures factual accuracy, the model achieved a result of 72.1%.

The Gemini 3 Deep Think mode goes beyond the standard model in reasoning and multimodal understanding. In testing, Deep Think surpassed Gemini 3 Pro, reaching 41.0% on Humanity’s Last Exam and 93.8% on GPQA Diamond. It also demonstrated the capacity to solve novel problems, scoring 45.1% on ARC-AGI-2 with code execution enabled. The mode is currently being evaluated by safety testers before being offered to Google AI Ultra subscribers.
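To put the Deep Think figures in context, the short sketch below computes the gap, in percentage points, between Deep Think and Gemini 3 Pro on the two benchmarks for which the article quotes both scores. The scores are the ones reported above; the dictionary layout and the function name `point_gains` are illustrative choices, not anything published by Google.

```python
# Scores (in percent) as quoted in this article.
GEMINI_3_PRO = {"Humanity's Last Exam": 37.5, "GPQA Diamond": 91.9}
DEEP_THINK = {"Humanity's Last Exam": 41.0, "GPQA Diamond": 93.8}


def point_gains(baseline, candidate):
    """Return candidate minus baseline, in percentage points, per shared benchmark."""
    return {
        name: round(candidate[name] - baseline[name], 1)
        for name in baseline
        if name in candidate
    }


gains = point_gains(GEMINI_3_PRO, DEEP_THINK)
for benchmark, gain in gains.items():
    print(f"{benchmark}: +{gain} percentage points")
```

These are absolute differences between reported scores, not relative improvements, and they say nothing about benchmarks where only one of the two figures was published.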

Designed from the ground up for learning and information synthesis, Gemini 3 operates with a 1 million-token context window and processes text, images, video, audio, and code. The model can decipher and translate handwritten recipes in various languages, or analyze academic papers and long videos to create interactive flashcards. Given video footage of a sports activity, it can identify areas for improvement and generate a training plan. Powered by Gemini 3, AI Mode in Search generates visual layouts and simulations on the fly in response to user queries.

In software development, Gemini 3 stands out for its coding and agentic coding capabilities. It reached the top of the WebDev Arena leaderboard with 1487 Elo points and scored 54.2% on Terminal-Bench 2.0, which tests a model's ability to operate a computer through the terminal. On SWE-bench Verified, which evaluates coding agents, it scored 76.2%. Developers can work with the model via Google AI Studio, Vertex AI, Gemini CLI, and third-party platforms such as Cursor, GitHub, JetBrains, Manus, and Replit.
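For readers who want to try the model programmatically, the following is a minimal sketch of a text request to the Gemini API over REST, using only the Python standard library. The endpoint path and the `x-goog-api-key` header follow the public Gemini API REST convention as commonly documented, but they should be verified against the current reference. The model ID `gemini-3-pro-preview` is an assumption and is not stated in the article, and the helper names `build_request` and `generate` are hypothetical.

```python
import json
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
# Assumption: illustrative model ID; check the model list in AI Studio.
MODEL_ID = "gemini-3-pro-preview"


def build_request(prompt, api_key, model=MODEL_ID):
    """Build (but do not send) a generateContent request for a text prompt."""
    url = f"{API_ROOT}/models/{model}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,
        },
        method="POST",
    )


def generate(prompt, api_key, model=MODEL_ID):
    """Send the request and return the text of the first candidate."""
    request = build_request(prompt, api_key, model)
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    # Assumes the usual response shape: candidates -> content -> parts -> text.
    return payload["candidates"][0]["content"]["parts"][0]["text"]
```

Calling `generate("Explain Terminal-Bench in one sentence.", api_key)` with a key created in AI Studio would send the request. Splitting request construction from sending keeps the payload inspectable without network access. In practice, Google's official SDKs or Vertex AI client libraries are likely the more convenient route.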

Google also announced “Google Antigravity,” a new agent-based development platform that turns the AI assistant from a passive tool into an active partner. AI agents operating within Antigravity have direct access to the editor, terminal, and browser, allowing them to plan and execute complex software tasks autonomously while validating their own code. The platform integrates Gemini 3 Pro with the Gemini 2.5 Computer Use model for browser control and the Nano Banana (Gemini 2.5 Image) model for image editing.

Gemini 3 has made progress in long-term planning capabilities, topping the leaderboard on Vending-Bench 2, which involves managing a simulated vending machine business. The model managed the process consistently over a simulated year without deviating from the task. These capabilities enable the model to execute multi-step personal workflows, such as organizing Gmail inboxes or scheduling appointments, under user control. Google AI Ultra subscribers can experience these capabilities through the Gemini Agent feature.

On safety, Google says Gemini 3 has undergone the most comprehensive evaluations of any Google model. The company reports reduced sycophancy (a bias toward agreeing with the user) and increased resistance to cyberattacks and prompt injections. Testing involved collaboration with independent bodies and experts, including the UK AISI, Apollo, Vaultis, and Dreadnode.

As of today, Gemini 3 is available to general users on the Gemini app and to Google AI Pro and Ultra subscribers via AI Mode in Search. Developers can access the model through AI Studio and Google Antigravity, while enterprise customers can use Vertex AI and Gemini Enterprise. The Gemini 3 Deep Think mode will be released in the coming weeks following the completion of additional safety evaluations. The company indicates that additional models will be added to the Gemini 3 series soon.